How to check the quality of translators’ work without overloading project managers

The success of any business depends heavily on the quality of the goods and services that it offers, and translation agencies are no exception. If you earn a reputation as a reliable supplier who doesn’t cause problems for its clients, you’ll most likely end up with a stream of orders for many years to come, and your clients won’t risk working with any other translation agencies even if they offer lower prices. Conversely, if you offer low-quality services, you’ll have to be satisfied with occasional orders and will lose clients to your competitors.

If, however, you offer high-quality translation services, then sooner or later, your volume of orders will increase to a level where you’ll begin experiencing a shortage of qualified translators and you’ll either have to turn clients away or jeopardize quality by sending your translations out to unprepared translators. In other words, you’ll stop growing. This article discusses how to avoid this by preparing new resources in advance for larger volumes of orders.

Quality control: the traditional approach

At agencies that check the quality of the services they offer, the process is organized as follows:

A text submitted by a translator is first sent to an editor and then (sometimes) to a proofreader. The most progressive agencies then carry out additional automated checks using special software (e.g., XBench, Verifika, or LexiQA).

If this process is organized properly and if highly qualified translators and editors are engaged, it will ensure high-quality translations that clients, in the vast majority of cases, will be satisfied with. Therefore, once the above procedures are carried out, the project is usually forgotten, and everyone moves on to something new. And therein lies a strategic error…

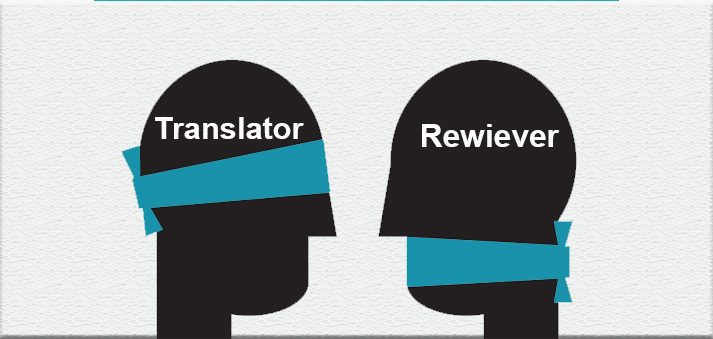

The problem is that the translator, who completed the very first stage of the work, doesn’t get any information about what happened to the file they submitted or what changes the editor made to their text. In the end, we’re left with the following picture:

The result

Translators become suppliers of raw materials and don’t grow professionally. They may even suspect that the agency isn’t happy with the quality of their work, but they won’t know how to improve it.

Editors continue correcting the same mistakes for months or even years on end. This is not only annoying for editors, but they also end up spending far too much time editing.

The agency experiences an acute shortage of qualified personnel: the quality of translators’ work doesn’t improve, too much time and money is spent on editing, and taking on newcomers seems like too big a risk to the agency’s reputation. In such a situation, managers are horrified rather than overjoyed when they receive a large, unexpected project from a new client.

With this approach, everyone loses in the long term. How can this be avoided? The answer is obvious: provide translators with feedback on the quality of their work and train them based on their mistakes. This shouldn’t be limited to general comments like “we’re not satisfied with the quality of your translation,” since such comments are, more often than not, taken as a personal insult. You have to send translators a complete list of corrections, and ideally you should also classify their mistakes and give them a quality score so that they can see an objective evaluation. Translators also need to be allowed to dispute the corrections and the classification of errors in a reasoned discussion.

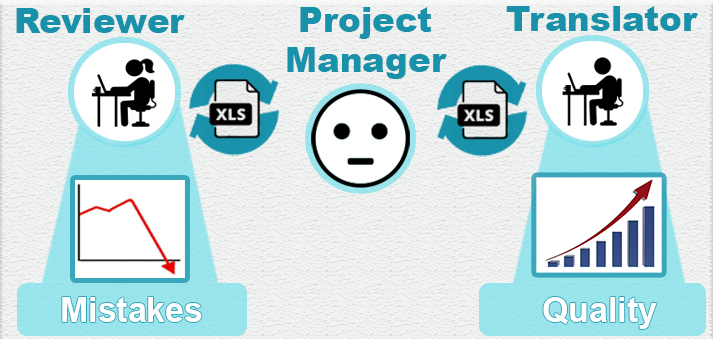

For now, let’s imagine that a translation agency is already implementing such a process:

That is, after every project, the editor sends the project manager a list of corrections and the translation’s quality score, and the project manager forwards them to the translator. The translator disputes several corrections and sends the file with comments back to the editor. Further discussion may take place in several stages, after which a final score is provided, and the project manager enters it into the system to keep a record of the quality of the translator’s work.

This process makes it possible to improve translators’ qualifications and compile statistics on the quality of their work. So why don’t the majority of translation agencies use such a process?

The most obvious answer to this question can be seen in the expression on the face of the manager in the image above. They’re already loaded with work: they distribute tasks to translators, follow up on compliance with deadlines, resolve a range of technical issues related to projects, and communicate with clients. For them, this process is just another headache; after all, they have to constantly send messages back and forth between the translator and the editor as well as document the process. This is an additional investment of time that they don’t have. If the agency has a reasonable boss, they’ll understand this and won’t demand strict adherence to this procedure for every task. As a result, most translation work won’t be evaluated.

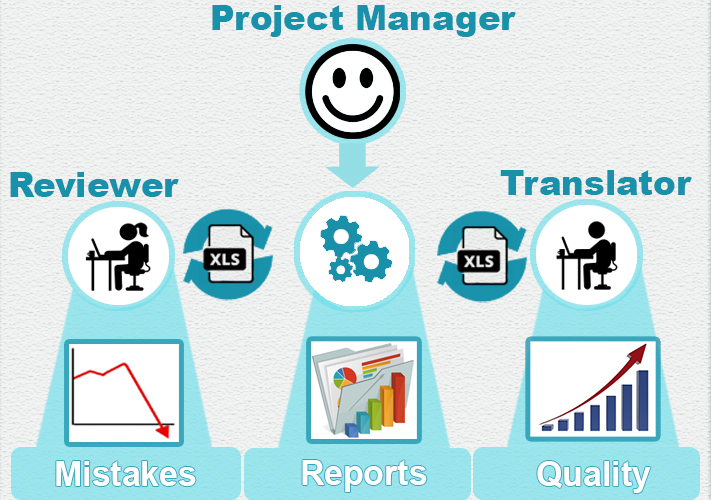

There are two options to relieve project managers of this additional work:

- Hiring someone whose job it will be to carry out this sort of mechanical work;

- Not hiring anyone, but instead creating and implementing a software program that will coordinate quality evaluations itself.

In the former case, the agency will have to take on the additional cost of hiring a specialized employee, while also risking an interruption in the process were they to leave. On top of that, software can handle this task much more efficiently. Picture the following:

In this case, the project manager simply tells the program to create a project to evaluate a job, presses the “Start” button, and that’s where their involvement in the process ends. The program sends instructions to the editor and the translator, coordinates their communication, and after some time sends the project manager a completed evaluation of the translation quality.

Developing such a system can also be costly, but a solution is already available on the market: TQAuditor. We’ll examine how it works below.

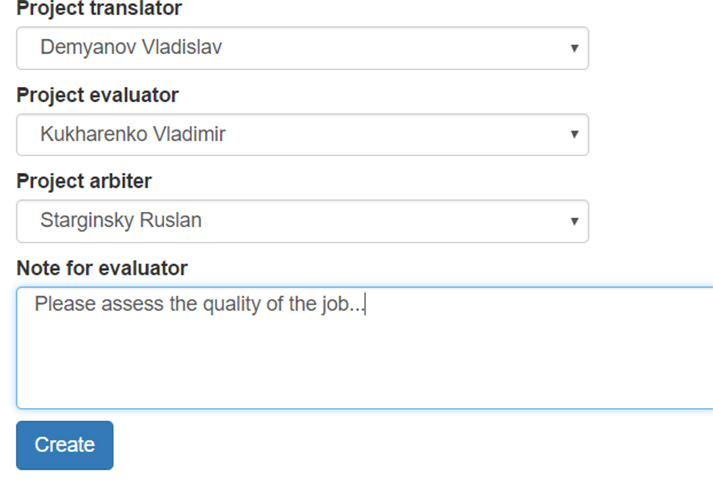

So, let’s imagine that we need to evaluate the quality of a translator’s work. To do this, we create a new project and choose the participants.

We press Create, and that’s it! After that, the project manager no longer needs to be involved. Meanwhile, the following takes place behind the scenes.

1. The system sends the editor a request to evaluate a specific translation.

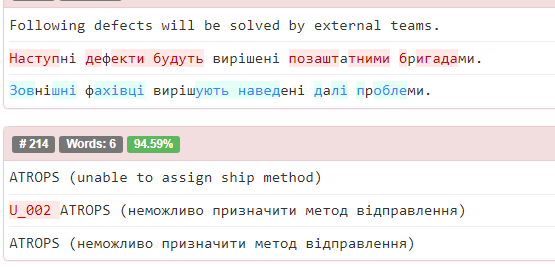

2. The editor loads two versions of the file (before and after editing), and the system generates a corrections report:

3. The system sends the translator a request to review the corrections. The translator logs into the system and studies the report.

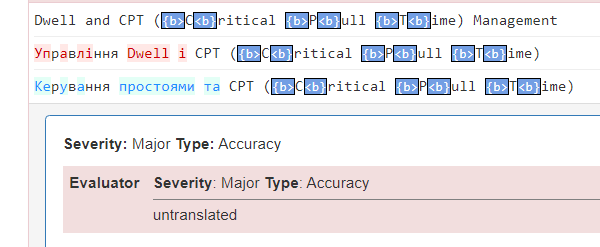

4. The system selects a particular number of fragments of the translation, and the editor classifies the errors:

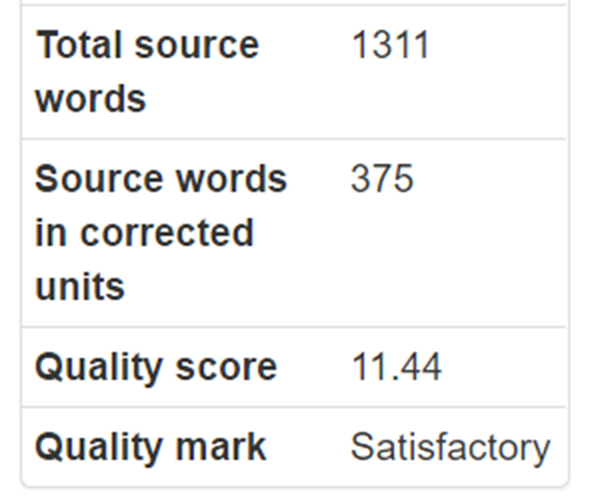

5. Based on the number of errors added, the system uses a special formula to calculate a quality score:

6. When the editor completes their assessment, the system sends a request to the translator to review their errors.

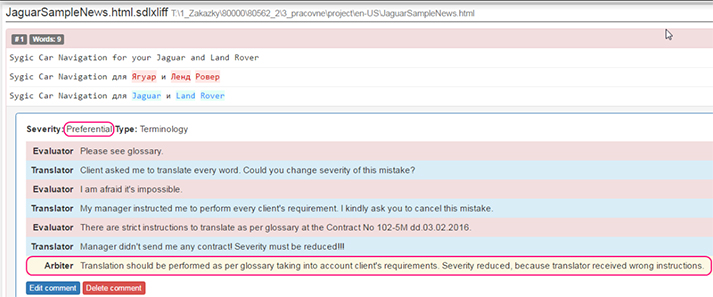

7. If the translator disagrees with something, they can start a discussion:

(This discussion is anonymous; the translator and editor can’t see each other’s names.)

8. When the discussion is over, the project manager receives a message with the final evaluation.
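The corrections report from step 2 can be approximated with a plain text comparison. The following is only a minimal sketch using Python’s standard `difflib` module; it is not how TQAuditor builds its report. The function name `corrections_report`, the segment-by-segment pairing, and the sample sentences are all assumptions made for illustration.

```python
import difflib


def corrections_report(before, after):
    """Return the segments the editor changed, with word-level differences.

    `before` and `after` are lists of segments (hypothetical input format):
    the translator's version and the edited version, in the same order.
    """
    report = []
    for number, (old, new) in enumerate(zip(before, after), start=1):
        if old == new:
            continue  # the editor left this segment untouched
        old_words, new_words = old.split(), new.split()
        matcher = difflib.SequenceMatcher(a=old_words, b=new_words)
        changes = [
            (tag, " ".join(old_words[i1:i2]), " ".join(new_words[j1:j2]))
            for tag, i1, i2, j1, j2 in matcher.get_opcodes()
            if tag != "equal"
        ]
        report.append({"segment": number, "changes": changes})
    return report


# Made-up sample data: the editor fixed one verb in the first segment.
translated = [
    "The contract enter into force on 1 May.",
    "Payment is due within 30 days.",
]
edited = [
    "The contract enters into force on 1 May.",
    "Payment is due within 30 days.",
]

for entry in corrections_report(translated, edited):
    print(entry)
```

Only changed segments appear in the output, which is what lets a translator study the editor’s corrections without rereading the whole file.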

The project manager was not involved in any of the processes described above. They only created the project, which took no more than a minute, and handed over the boring, routine work to the system.
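The article does not disclose the formula used in step 5, so the following is purely an illustrative sketch of how a score of this kind might be computed. It assumes weighted penalty points per 1,000 evaluated words subtracted from a maximum of 100; the severity names, the weights, and the function `quality_score` are hypothetical.

```python
# Hypothetical severity weights; the real system's weights are not given here.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 10}


def quality_score(errors, word_count, max_score=100.0):
    """Compute an illustrative quality score for an evaluated sample.

    `errors` is a list of (category, severity) pairs added by the editor;
    `word_count` is the size of the evaluated fragments in words.
    """
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(SEVERITY_WEIGHTS[severity] for _category, severity in errors)
    penalty_per_1000 = penalty * 1000 / word_count  # normalize by sample size
    return max(0.0, round(max_score - penalty_per_1000, 1))


# Made-up example: three classified errors in a 500-word sample.
errors = [
    ("grammar", "minor"),
    ("terminology", "major"),
    ("punctuation", "minor"),
]
print(quality_score(errors, word_count=500))
```

Normalizing by the number of evaluated words keeps scores comparable between short and long samples, which matters if only selected fragments of each translation are assessed.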

With this sort of approach, a single project manager can coordinate up to 500 quality assessments a day. Time savings on this scale allow the company to support translator training without significant costs.

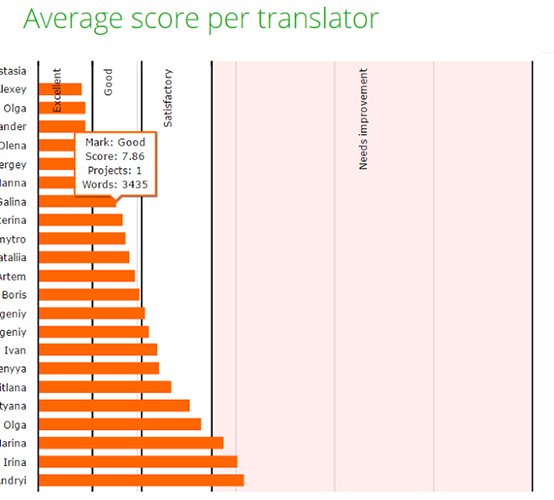

In addition, all evaluations are stored in the same system, and so they can be accessed at any time. As information is accumulated, it becomes possible to generate a variety of reports on both translators and editors. For example, you can see which translators received high scores in the previous month or which editors are the strictest and assign the lowest scores:

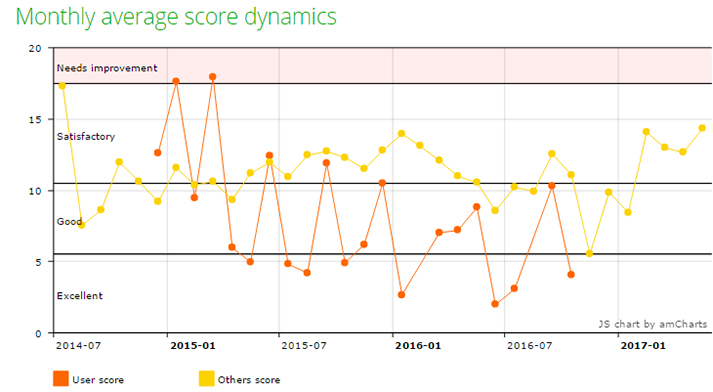

It’s possible to see how a translator’s score has changed over time:

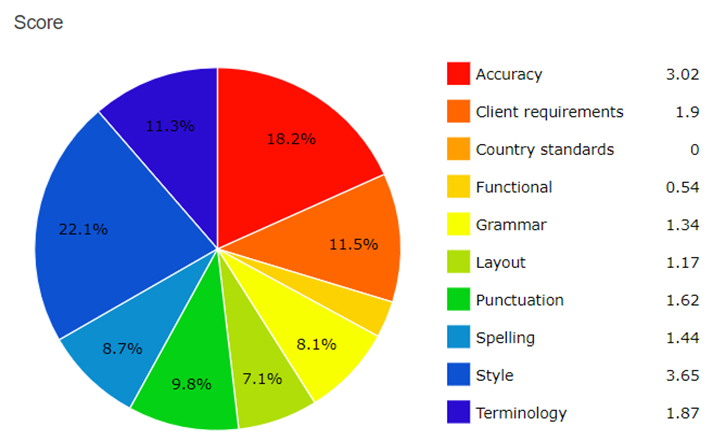

You can get information on the kinds of mistakes that a translator makes:

In addition, the system makes it possible to see the correlation between a translator’s assessments and the subject of the translation and who did the evaluation. Similar reports may also be generated on editors: what the average score of their evaluations is, what errors they identify most often, which translators get the highest scores from them, etc. Every editor and translator can also see reports on their work.
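Once evaluations are stored in one place, reports of this kind come down to simple grouping and averaging. The sketch below shows the idea on a handful of made-up records; the record fields, the helper names `average_by` and `score_history`, and the data itself are assumptions for illustration, not TQAuditor’s data model.

```python
from collections import defaultdict
from statistics import mean

# Made-up evaluation records; field names are hypothetical.
evaluations = [
    {"translator": "T1", "editor": "E1", "month": "2024-01", "score": 82.0},
    {"translator": "T1", "editor": "E2", "month": "2024-02", "score": 90.0},
    {"translator": "T2", "editor": "E1", "month": "2024-01", "score": 70.0},
    {"translator": "T2", "editor": "E2", "month": "2024-02", "score": 88.0},
]


def average_by(records, key):
    """Average the scores grouped by the given field (translator, editor, ...)."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["score"])
    return {name: mean(scores) for name, scores in groups.items()}


def score_history(records, translator):
    """Return one translator's (month, score) pairs in chronological order."""
    rows = [(r["month"], r["score"]) for r in records if r["translator"] == translator]
    return sorted(rows)


by_translator = average_by(evaluations, "translator")
by_editor = average_by(evaluations, "editor")
# The "strictest" editor is taken here to be the one with the lowest average score.
strictest_editor = min(by_editor, key=by_editor.get)

print(by_translator)
print(by_editor, strictest_editor)
print(score_history(evaluations, "T1"))
```

The same grouping function could be pointed at other fields, such as subject area or error category, provided each evaluation record carries them.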

The reporting function is a powerful tool that enables the agency’s management to understand very clearly what is happening in terms of work quality within their company. Everything becomes transparent: in just a few minutes, they can find out how good a particular translator’s work is without ever having worked with them directly.

Why is all of this necessary?

TQAuditor makes it possible to automate, as far as possible, the process of training translators and evaluating the quality of their work. However, using it will still entail certain additional costs, since it will be necessary to pay for the work of the editors who classify errors and discuss them with translators. Wouldn’t it be easier to do all this the old-fashioned way and save some money?

It all depends on what you are trying to achieve. If you simply want to earn more right now, then it would certainly be better to skip this procedure. However, if you want to build a strong team of translators and editors who, in time, will be able to complete larger volumes of high-quality work and allow your company to grow, then there’s no other way to do this than to invest in your people. Otherwise, you will constantly face a shortage of qualified translators, and you will end up turning down or losing out on future orders. Your business won’t grow if the people involved in it don’t grow.